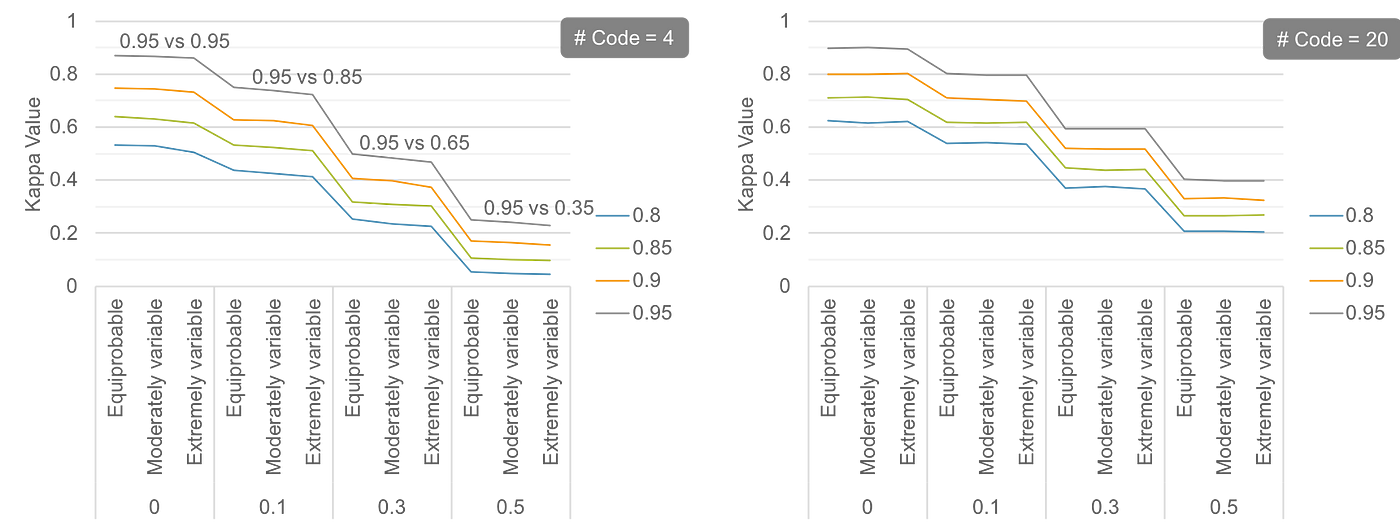

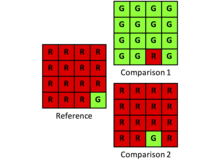

Percent agreement and Cohen's kappa values for automated classification...

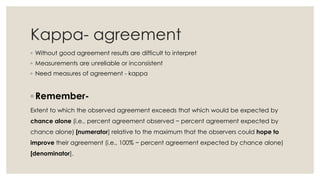

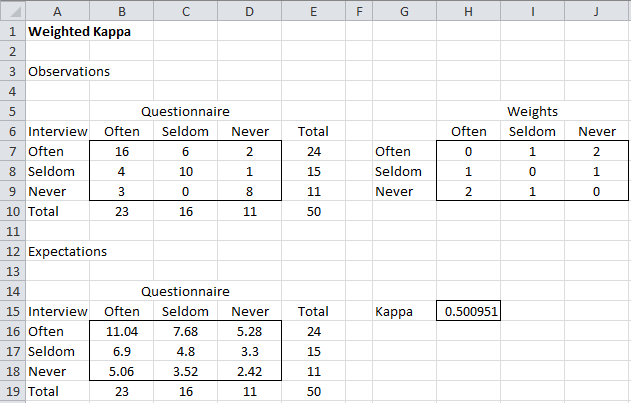

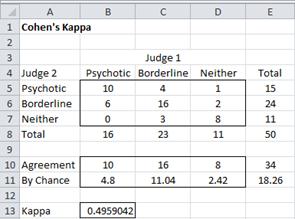

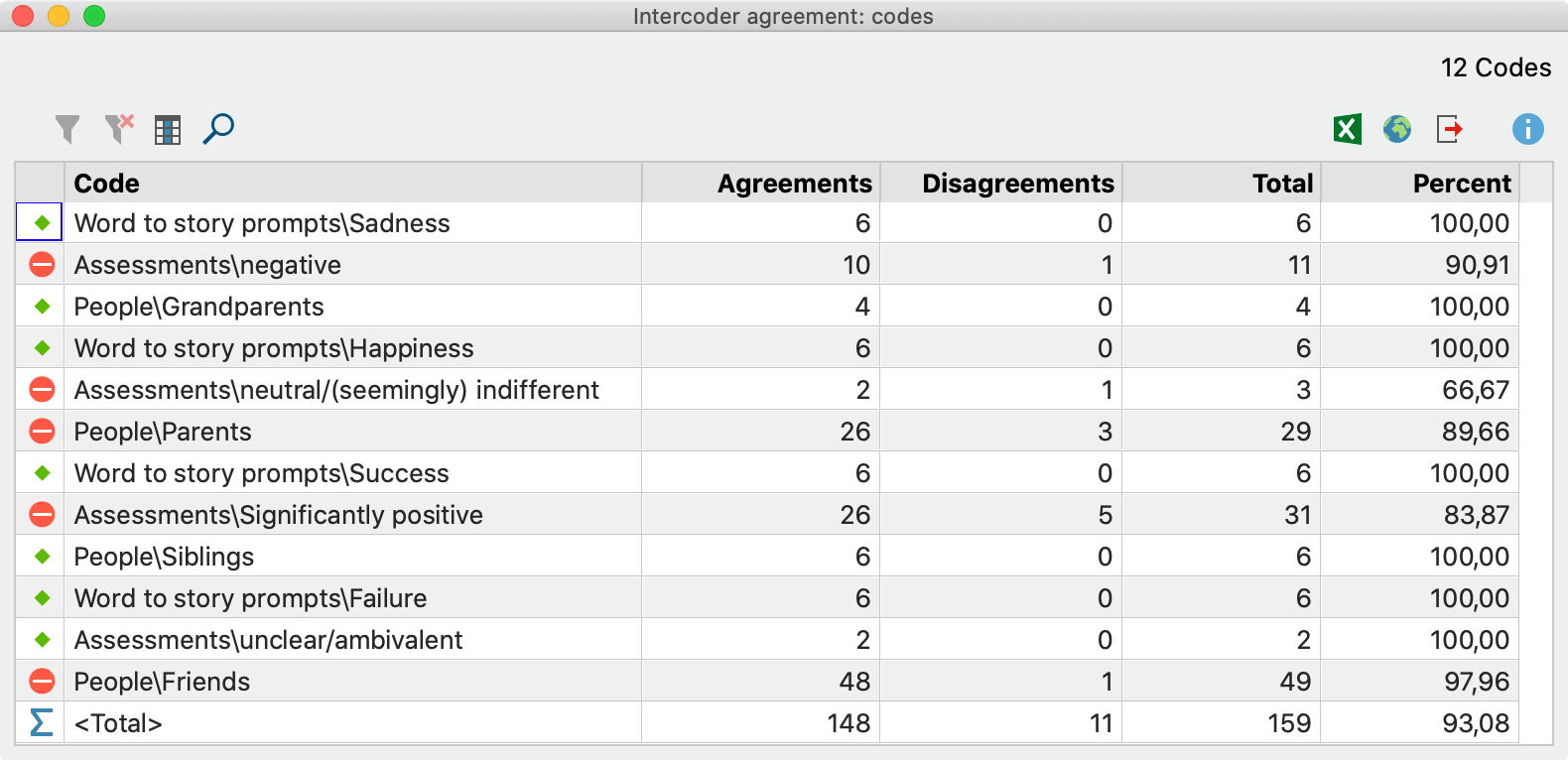

Agree or Disagree? A Demonstration of An Alternative Statistic to Cohen's Kappa for Measuring the Extent and Reliability of Ag

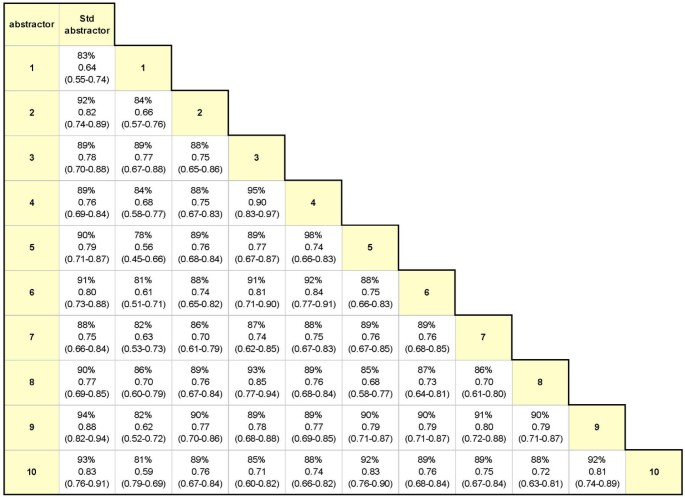

Examining intra-rater and inter-rater response agreement: A medical chart abstraction study of a community-based asthma care program | BMC Medical Research Methodology

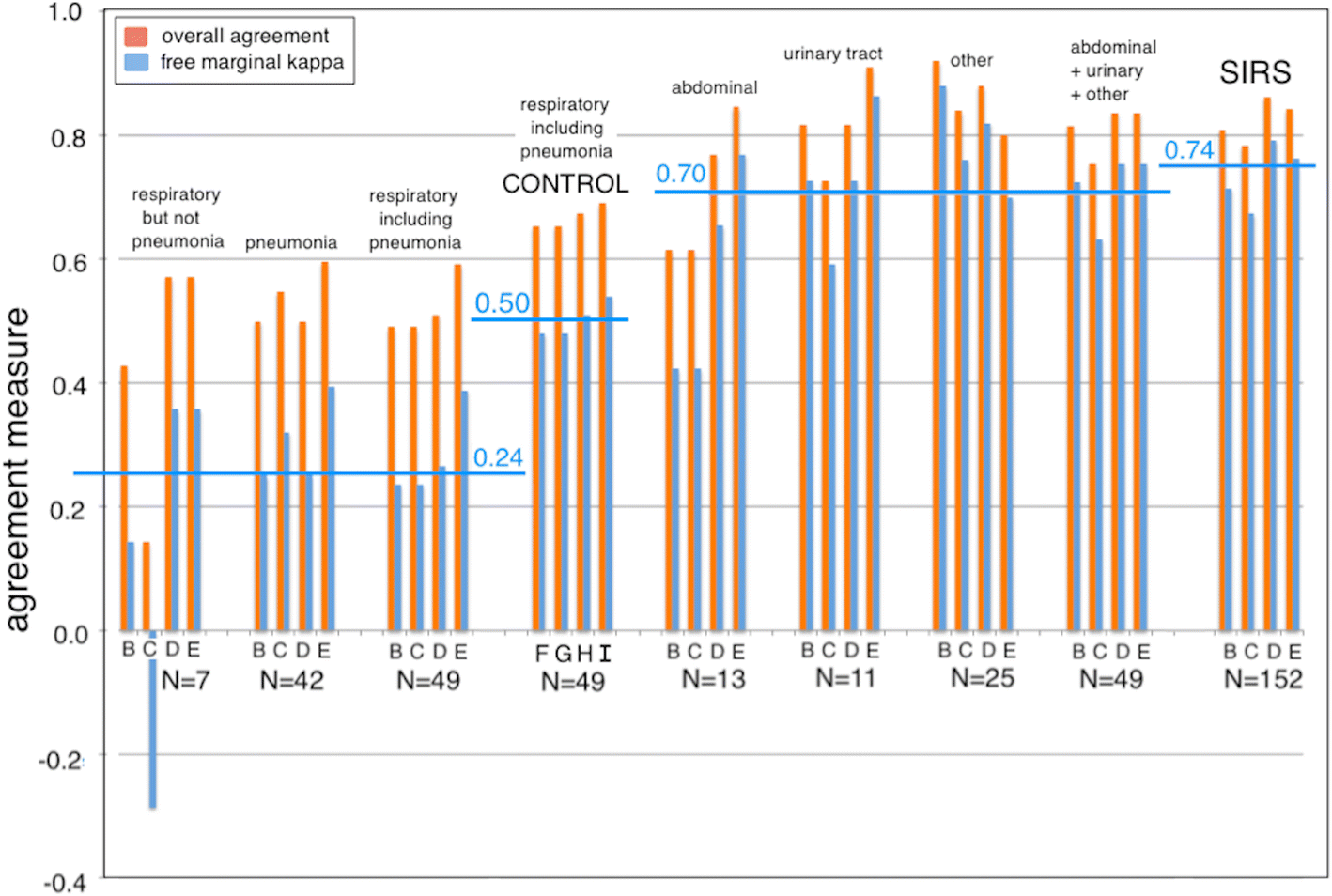

Physician agreement on the diagnosis of sepsis in the intensive care unit: estimation of concordance and analysis of underlying factors in a multicenter cohort | Journal of Intensive Care

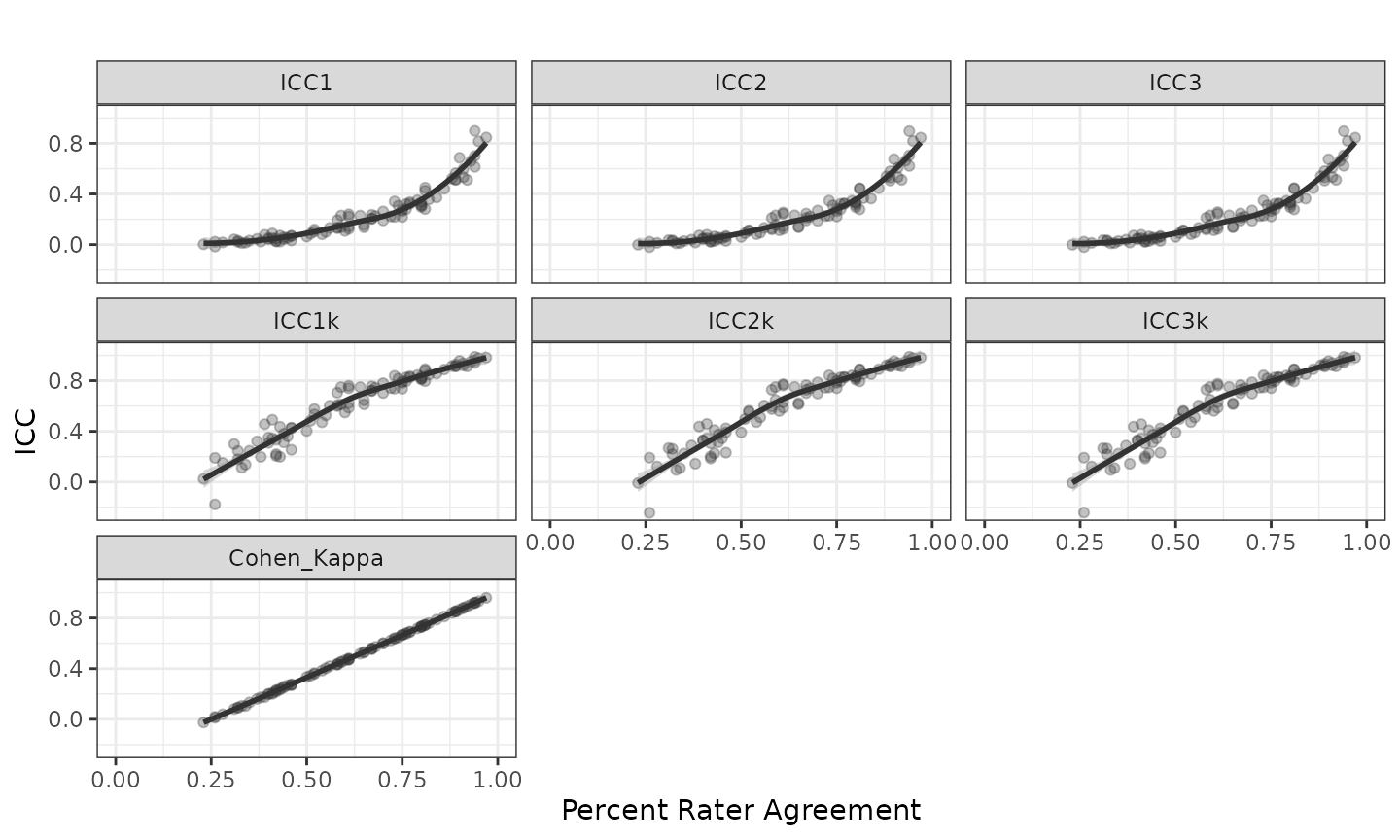

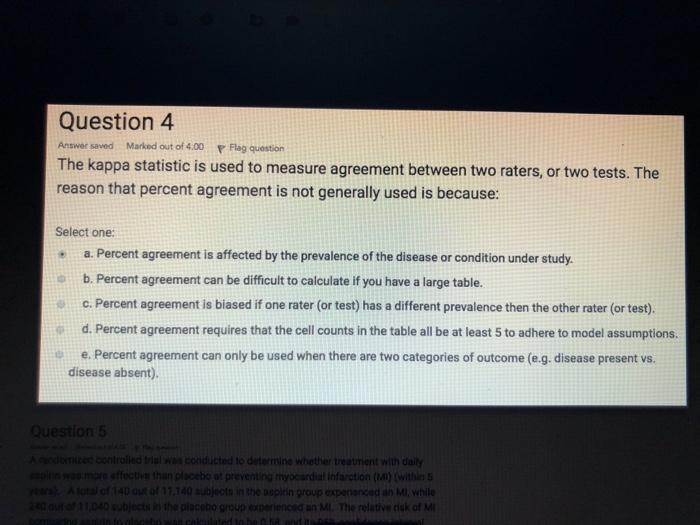

Percent Agreement, Pearson's Correlation, and Kappa as Measures of Inter-examiner Reliability | Semantic Scholar